What is the technological singularity?

The technological singularity is a "theoretical moment in time when artificial intelligence will have progressed to the point of a greater-than-human intelligence" (from the sheet you gave us).

Vernor Vinge:

[Image: Vernor Vinge]

Vinge describes four possible paths to the singularity:

1. Scientists could develop more advanced artificial intelligence (AI)

2. Computer networks might somehow become self-aware

3. Computer/human interfaces could become so advanced that humans essentially evolve into a new species

4. Advances in biological science could allow humans to physically engineer human intelligence

He discusses all of these possibilities, but focuses mainly on the first one.

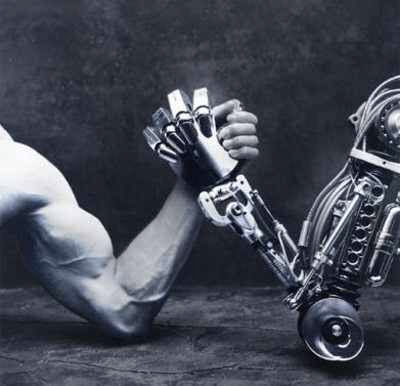

The Probability of the Technological Singularity:

“Technological advances would move at a blistering pace. Machines would know how to improve themselves. Humans would become obsolete in the computer world. We would have created a superhuman intelligence. Advances would come faster than we could recognize them. In short, we would reach the singularity” (http://electronics.howstuffworks.com/gadgets/high-tech-gadgets/technological-singularity1.htm).

Artificial intelligence (AI) is “a term that in its broadest sense would indicate the ability of an artifact to perform the same kinds of functions that characterize human thought processes… This concept has captured the interest of humans since ancient times. With the growth of modern science, the search for AI has taken two major directions: psychological and physiological research into the nature of human thought and cognitive processes, and the technological development of increasingly sophisticated computing systems that simulate various aspects of such human activities as reasoning, learning, and self-correction and that can do tasks traditionally considered to require intelligence, such as speaking and speech recognition, vision, chess playing, and disease diagnosis.” (http://web.b.ebscohost.com/sas/detail?vid=2&sid=496ffb6d-2865-4ce8-bbc4-65db8b0f6539%40sessionmgr113&hid=124&bdata=JkF1dGhUeXBlPQ%3d%3d#AN=AR151400&db=funk).


[Image: John McCarthy]

“Among the capabilities that might be expected of a full-fledged AI machine are the ability to search quickly through large amounts of data, to recognize patterns (as humans do when they see or speak), to learn from experience, to make commonsensical as well as logical inferences from given inputs, and to plan specific strategies and schedule specific actions when presented with a situation that needs to be dealt with. AI researchers’ efforts toward these objectives make use of a plethora of methods ranging from brute computation relying on traditional binary (true-false) logic to probabilistic techniques such as fuzzy logic (where variables have degrees of truth or falsehood) and Bayesian methods (which take experience into account in calculating conditional probabilities). To ease the job of developing AI software, special computer languages (see Computer) were created, some of which came into more general use. Examples include LISP (“LISt Processing”), introduced by McCarthy in 1959; Prolog; and Smalltalk.

Much research on imitating the cognitive and learning activities of the human brain focuses on so-called neural networks. These combine large numbers of interconnected processors (or simulations thereof on a computer) to mimic to some degree the workings of the brain’s complicated network of interconnected neurons, which number in the billions in humans. The connections between the individual processing nodes in a neural network are weighted, and these weights are adjustable, allowing the network to “learn.” One of the earliest major efforts in this area by AI researchers was Minsky’s 1954 doctoral dissertation on “Neural Nets and the Brain Model Problem.” Some commentators speculate that the elusive quality of self-awareness or consciousness is something that might somehow spontaneously emerge as neural networks approach the complexity of the brain’s system of neurons (see Neurophysiology).

Another focus of AI research involves applying the competitive principles of biological evolution to “evolve” solutions to problems. In so-called genetic programming and genetic algorithms, multiple options are created and compared in order to select a solution.

AI also encompasses the concept of agents. These are programs that are able to act autonomously (but cooperatively with each other) on behalf of a human or another program in an unpredictable and changing environment, such as the Internet.” (http://web.b.ebscohost.com/sas/detail?vid=2&sid=496ffb6d-2865-4ce8-bbc4-65db8b0f6539%40sessionmgr113&hid=124&bdata=JkF1dGhUeXBlPQ%3d%3d#AN=AR151400&db=funk)
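The passage above says that the connections in a neural network are weighted, and that adjusting those weights is what lets the network "learn." As a rough illustration (not taken from the quoted source), the sketch below trains a single artificial neuron, a perceptron, to reproduce logical AND. The function names, the 0/1 step activation, the learning rate, and the AND example are all assumptions chosen for simplicity; real neural networks use many neurons and more sophisticated training methods.

```python
# A minimal sketch (illustrative assumption, not from the quoted source):
# one artificial "neuron" whose connection weights are adjusted until it
# reproduces logical AND.


def step(x: float) -> int:
    """Fire (1) if the weighted input is positive, otherwise stay silent (0)."""
    return 1 if x > 0 else 0


def train_perceptron(samples, epochs=20, learning_rate=1.0):
    """Learn weights with the classic perceptron update rule.

    samples: list of ((x1, x2), target) pairs with 0/1 values.
    Returns (weights, bias).
    """
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            output = step(weights[0] * x1 + weights[1] * x2 + bias)
            error = target - output
            # Nudge each weight in the direction that reduces the error;
            # this adjustment is what lets the network "learn".
            weights[0] += learning_rate * error * x1
            weights[1] += learning_rate * error * x2
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, x1, x2):
    """Run the trained neuron on one input pair."""
    return step(weights[0] * x1 + weights[1] * x2 + bias)


# Hypothetical training data: the truth table for logical AND.
AND_SAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

if __name__ == "__main__":
    w, b = train_perceptron(AND_SAMPLES)
    for (x1, x2), target in AND_SAMPLES:
        print(x1, x2, "->", predict(w, b, x1, x2), "(expected", target, ")")
```

Running it prints each input pair next to the neuron's answer. Because the weights start at zero, the learning rate only rescales them, so 1.0 is used here to keep the arithmetic exact.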

Cognitive Artificial Intelligence:

"AI can have two purposes. One is to use the power of computers to augment human thinking, just as we use motors to augment human or horse power. Robotics and expert systems are major branches of that. The other is to use a computer's artificial intelligence to understand how humans think. In a humanoid way. If you test your programs not merely by what they can accomplish, but how they accomplish it, then you're really doing cognitive science; you're using AI to understand the human mind.” -Herbert Simon: Thinking Machines, from Doug Stewart's Interview, June 1994, Omni Magazine

"From its inception, the cognitive revolution was guided by a metaphor: the mind is like a computer. We are a set of software programs running on 3 pounds of neural hardware. And cognitive psychologists were interested in the software. The computer metaphor helped stimulate some crucial scientific breakthroughs. It led to the birth of artificial intelligence and helped make our inner life a subject suitable for science. ... For the first time, cognitive psychologists were able to simulate aspects of human thought. At the seminal MIT symposium, held on Sept. 11, 1956, Herbert Simon and Allen Newell announced that they had invented a 'thinking machine' --- basically a room full of vacuum tubes --- capable of solving difficult logical problems. "

"Cognitive Science is an interdisciplinary field that has arisen during the past decade at the intersection of a number of existing disciplines, including psychology, linguistics, computer science, philosophy, and physiology. The shared interest that has produced this coalition is understanding the nature of the mind. This quest is an old one, dating back to antiquity in the case of philosophy, but new ideas are emerging from the fresh approach of Cognitive Science.” (http://aitopics.org/topic/cognitive-science).

“Cognitive science is the interdisciplinary study of mind and intelligence, embracing philosophy, psychology, artificial intelligence, neuroscience, linguistics, and anthropology. Its intellectual origins are in the mid-1950s when researchers in several fields began to develop theories of mind based on complex representations and computational procedures.” (http://aitopics.org/topic/cognitive-science).

“Computer technology advances at a faster rate than many other technologies. Computers tend to double in power every two years or so. This trend is related to Moore’s Law, which states that the transistors double in power every 18 months. Vinge says that at this rate, it’s only a matter of time before humans build a machine that can ‘think like a human’” (http://electronics.howstuffworks.com/gadgets/high-tech-gadgets/technological-singularity1.htm)
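The doubling arithmetic behind this argument can be made concrete. The sketch below assumes perfectly steady doubling, which real hardware does not strictly follow, and its function names are hypothetical. (Moore's observation is usually stated in terms of the number of transistors on a chip doubling, rather than their "power.")

```python
import math


def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times more powerful computers become after `years`,
    assuming power doubles once every `doubling_period_years`."""
    return 2 ** (years / doubling_period_years)


def years_to_reach(factor: float, doubling_period_years: float) -> float:
    """Return how many years steady doubling needs to multiply power by `factor`."""
    return doubling_period_years * math.log2(factor)


if __name__ == "__main__":
    # Doubling "every two years or so": ten years gives 2**5 = 32 times the power.
    print(growth_factor(10, 2))     # 32.0
    # Doubling every 18 months: six years gives 2**4 = 16 times the power.
    print(growth_factor(6, 1.5))    # 16.0
    # A 1024-fold increase takes 10 doublings, i.e. 20 years at one doubling per two years.
    print(years_to_reach(1024, 2))  # 20.0
```

This multiplicative growth is what the quote means by "at this rate": each extra doubling period multiplies the total, rather than adding to it.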

Recent events seem to support Vinge. On June 7, 2014, a computer program was reported to have passed the Turing test for the first time. The Turing test was proposed by Alan Turing, a pioneer of computer science. In 1951 he wrote that “at some stage…we should have to expect the machines to take control.” However, fictional representations in pop culture “misrepresent the Turing Test, turning it into a measure of whether a robot can pass for a human. The original Turing Test wasn’t intended for that.” The Turing test was meant to decide whether “a machine can be considered to think in a manner indistinguishable from a human-and that, even Turing himself discerned, depends on which questions you ask” (http://www.bbc.com/future/story/20150724-the-problem-with-the-turing-test). This has led some to argue that the Turing test itself is flawed.

“Eugene Goostman [a computer program], which simulates a 13-year-old Ukrainian boy…passed the Turing test at an event organized by University of Reading.” Eugene was created by Vladimir Veselov, who was born in Russia and now lives in the United States. The transcripts of the conversations are unavailable, but they may appear in a future academic paper. According to the organizers, no other computer had passed the test under these conditions. However, some artificial intelligence experts have “disputed the victory, suggesting the contest has been weighted in the chatbot’s favour… The Turing Test can only be passed if a computer is mistaken for a human more than 30% of the time during a series of five-minute keyboard conversations. On 7 June Eugene convinced 33% of the judges at the Royal Society in London that it was human. Other artificial intelligence (AI) systems also competed, including Cleverbot, Elbot, and Ultra Hal.” (http://www.bbc.com/news/technology-27762088).
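The pass rule described in the quote is simple arithmetic: a program passes if it is mistaken for a human more than 30% of the time. The sketch below encodes that rule; the function name is hypothetical, and the judge counts in the example are made up for illustration, since the quote reports only a percentage.

```python
def passes_turing_threshold(judges_fooled: int, total_judges: int,
                            threshold: float = 0.30) -> bool:
    """Return True if the program fooled MORE than `threshold` of the judges.

    Encodes the "more than 30% of the time" rule quoted from the BBC report.
    """
    if total_judges <= 0:
        raise ValueError("total_judges must be a positive number")
    return judges_fooled / total_judges > threshold


if __name__ == "__main__":
    # Hypothetical counts: 33 of 100 judges fooled is 33%, which is above 30%.
    print(passes_turing_threshold(33, 100))  # True
    # Exactly 30% does not count, because the rule says "more than" 30%.
    print(passes_turing_threshold(30, 100))  # False
```

Under this rule, Eugene's reported 33% clears the bar by only a few percentage points, which is part of why critics questioned how meaningful the result was.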

This event has been labeled “‘historic’ by the organizers, who claim no computer has passed the test before. ‘Some will claim that the Test has already been passed,’ says Kevin Warwick… ‘The words Turing test have been applied to similar competitions around the world. However, this event involved more simultaneous comparison tests than ever before, was independently verified and, crucially, the conversations were unrestricted.’” (http://www.bbc.com/news/technology-27762088). However, not everyone agrees: “Hugh Loebner, creator of another Turing Test competition, has also criticised the University of Reading's experiment for only lasting five minutes. ‘That's scarcely very penetrating,’ he told the Huffington Post, noting that Eugene had previously been ranked behind seven other systems in his own 25-minute long Loebner Prize test.” (http://www.bbc.com/news/technology-27762088).

The Likelihood of the Singularity:

“‘When greater-than-human intelligence drives progress,’ Vinge writes, ‘that progress will be much more rapid.’ This accelerating loop of self-improving intelligence could cause a large jump in progress in a very brief period of time - this is being called a ‘hard takeoff’ by people interested in this theory of development.

Kurzweil sees a more gradual acceleration - a ‘soft takeoff’ - one in which humans work to also extend their intellectual capacity to keep up with artificially intelligent entities. Still, he predicts that The Singularity could come as soon as 2045.” (http://www.elon.edu/e-web/predictions/150/2026.xhtml)

Kurzweil also predicts that major milestones could arrive much sooner. For instance, “In only 15 years, robots will be smarter than the people who build them, a leading scientist believes.

Ray Kurzweil predicts that by 2029, robots will be able to hold conversations with people, learn from experience and understand human behavior better than even humans. He added that machines will actually be able to flirt. Kurzweil, who is now working with Google, is credited with such inventions as scanners, synthesizer keyboards and speech-recognition systems. He forecast in 1990 that within a decade, a computer would defeat the best human chess player. Only seven years later, grandmaster Garry Kasparov was beaten by the IBM computer Deep Blue.” (http://nypost.com/2014/02/24/robots-will-be-smarter-than-humans-by-2029-scientist/).

Unlikeliness of a Machine Takeover:

“It might not even be physically possible to achieve the advances necessary to create the singularity effect. To understand this, we need to go back to Moore's Law…[Moore] noticed that as time passed the price of semiconductor components and manufacturing costs fell. Rather than produce integrated circuits with the same amount of power as earlier ones for half the cost, engineers pushed themselves to pack more transistors on each circuit. The trend became a cycle, which Moore predicted would continue until we hit the physical limits of what we can achieve with integrated circuitry” (http://electronics.howstuffworks.com/gadgets/high-tech-gadgets/technological-singularity2.htm).

Timeline leading up to the Technological Singularity:

Arguably, the technological singularity has been underway since Deep Blue beat Garry Kasparov at chess in 1997. Since then, computers and artificial intelligence have become more advanced. The question is, how far away is the singularity? “Vinge says that at this rate, it’s only a matter of time before humans build a machine that can ‘think like a human’” (http://electronics.howstuffworks.com/gadgets/high-tech-gadgets/technological-singularity1.htm). As mentioned earlier, a machine has reportedly passed the Turing test, although that result is disputed. This is just one example.

Many speculate that a series of events will lead up to the singularity. Vinge suggests that the singularity could come about through a combination of:

“The AI Scenario: We create superhuman artificial intelligence (AI) in computers.

The IA Scenario: We enhance human intelligence through human-to-computer interfaces--that is, we achieve intelligence amplification (IA).

The Biomedical Scenario: We directly increase our intelligence by improving the neurological operation of our brains.

The Internet Scenario: Humanity, its networks, computers, and databases become sufficiently effective to be considered a superhuman being.

The Digital Gaia Scenario: The network of embedded microprocessors becomes sufficiently effective to be considered a superhuman being.” (http://spectrum.ieee.org/biomedical/ethics/signs-of-the-singularity).


Technological Singularity in Pop Culture:

“Popular movies in which computers become intelligent and violently overpower the human race include Colossus: The Forbin Project, the Terminator series, the very loose film adaptation of I, Robot, and The Matrix series.” (http://www.liquisearch.com/technological_singularity/in_popular_culture)

The idea of the singularity has grown greatly in popularity in pop culture. Many blockbuster movies and science fiction novels focus on the singularity and its repercussions, or on a post-apocalyptic future in which machines take over. Such stories have arguably surpassed the popularity of zombie and alien apocalypses.

How would we survive the Technological Singularity?

Sources:

http://nypost.com/2014/02/24/robots-will-be-smarter-than-humans-by-2029-scientist/

The sheet you gave us

